European Commission (EC)

The European Commission’s vision for Artificial Intelligence rests on the twin objectives of excellence and trust. People’s safety and fundamental rights are at the centre of all efforts. At the same time, the EC works with its Member States and other partners to foster socio-economic and environmental wellbeing through flourishing science, business and innovation.

Artificial Intelligence (AI) technologies have the potential to significantly improve our economies and societies. They can help us, for example, to reach climate and sustainability goals and bring high-impact innovations in healthcare, education, transport, industry and many other sectors. However, certain AI applications, if used without care, may create complexity, disrupt activities and processes, or even harm human rights.

In its efforts to address both sides of the coin and offer clarity to all those who live, travel or carry out their activities within the European Union (EU), the EC has recently proposed a set of rules that address specific risks and lay the foundation for the world’s first regulatory framework dedicated to the deployment and use of AI. Moreover, the EC, together with all EU Member States as well as Norway and Switzerland, has developed a plan of concrete, coordinated action to support research, foster collaboration and increase investment in AI development and deployment.

Addressing AI Risks

While most AI systems pose limited or no risk and can help solve many societal challenges, certain AI systems may create risks that must be addressed to avoid undesirable outcomes. For example, it is often difficult to find out why an AI system has made a decision or prediction and reached a certain outcome. As a result, it may be difficult to assess whether someone has been unfairly disadvantaged, such as in a hiring decision or in an application for a public benefit scheme.

Although existing legislation provides some protection, it is insufficient to address the specific challenges AI systems may bring.

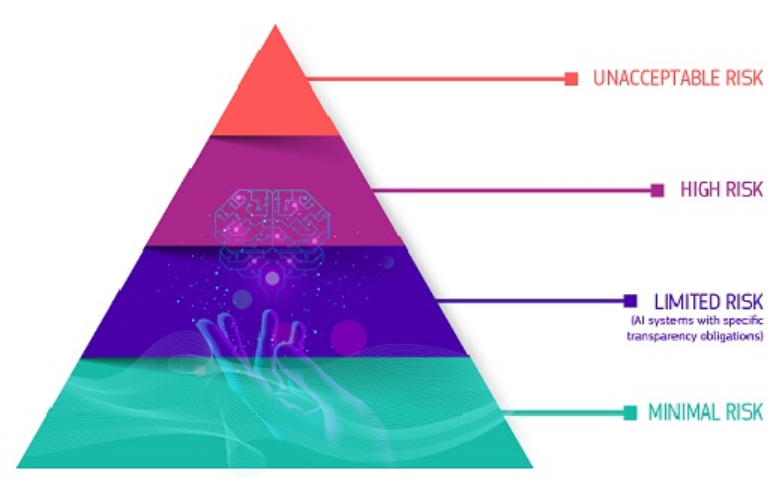

The proposed regulatory framework follows a risk-based approach with four levels of risk:

Unacceptable risk: A very limited set of particularly harmful uses of AI that contravene fundamental rights and should be banned.

High-risk: A limited number of AI systems that adversely affect people’s safety or their fundamental rights are subject to strict obligations before they can be put on the market.

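AI with specific transparency requirements: Certain AI systems are subject to specific transparency obligations; for example, people should be made aware that they are interacting with a chatbot rather than a human.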

Minimal to no risk: All other AI systems, which make up the vast majority, can be developed and used under existing legislation without additional legal obligations.

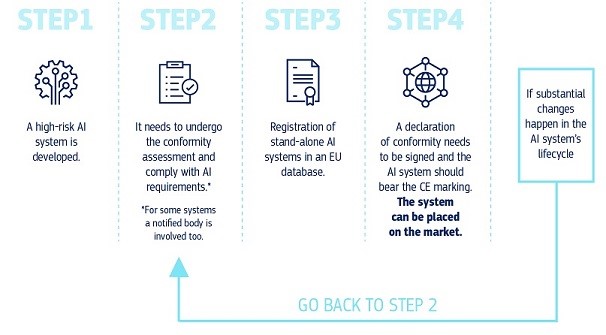

How does it all work in practice for providers of high-risk AI systems?

Once a high-risk AI system is on the market, authorities are in charge of market surveillance, users ensure human oversight and monitoring, and providers have a post-market monitoring system in place. Providers and users will also report serious incidents and malfunctions.

Future-proof rules

As AI is a fast-evolving technology, the proposal takes a future-proof approach, allowing the rules to adapt to technological change. AI applications should remain trustworthy even after they have been placed on the market, which requires ongoing quality and risk management by providers.

Next steps with AI rules

Following the Commission’s proposal in April 2021, the AI regulation could enter into force in the second half of 2022, at the start of a transitional period. During this period, standards would be mandated and developed, and the governance structures set up would become operational. The regulation could become applicable to operators in the second half of 2024 at the earliest, once the standards are ready and the first conformity assessments have been carried out.

Working together

In 2018, the European Commission, together with its Member States as well as Norway and Switzerland, agreed to work together to maximise investment in AI and to prepare a framework within which these actions can operate. This “Coordinated Plan on AI” was updated in 2021 to translate this joint commitment into action, with a vision to Accelerate, Act and Align.

Accelerate investments in AI technologies to drive resilient economic and social recovery aided by the uptake of new digital solutions.

Act on AI strategies and programmes by implementing them fully and in a timely manner, to ensure that the EU benefits fully from being a first mover and early adopter.

Align AI policy to remove fragmentation and address global challenges.

Excellence in research and innovation

Through the Horizon Europe and Digital Europe programmes, at least EUR 1 billion per year will be invested in AI during the 2021-2027 programming period. This funding is intended to attract additional public and private investment, reaching a total of EUR 20 billion per year over the course of this decade. As one of the tools to achieve this objective, the Commission has launched the European Partnership for Artificial Intelligence, Data and Robotics, which is expected to drive innovation, acceptance and uptake of AI.

Monitoring the AI landscape in Europe

AI Watch is a European Commission project that aims to facilitate mutual learning and exchange between EU countries and to identify synergies and opportunities for collaboration. Launched in December 2018, AI Watch supports the Coordinated Plan on AI by monitoring AI-related developments in the European Union.

Promoting societal and international dialogue

The European Commission has a strategic objective to support the development and use of AI for good and for all. To that end, it engages in an ongoing dialogue that has, over the years, democratised the policymaking process in Europe and brought the discussion to an international level.

It all started in 2018, when the High-Level Expert Group on AI (AI HLEG) was set up as the steering group of the European AI Alliance. Followed by more than 4,000 representatives of industry, business, academia, civil society, national governments and citizens from all over the world, the AI Alliance forum facilitates the exchange of information on every socio-economic aspect of AI.

The Ethics Guidelines for Trustworthy AI, a landmark document in AI ethics, are the result of the AI HLEG’s work and the input provided by the AI Alliance community. Building on this common work, the European Commission launched a series of consultations to which this community contributed valuable input. As a result, the EC’s White Paper on AI was published in February 2020 and, following a further public consultation, the AI Regulatory Proposal and the updated Coordinated Plan on AI were presented in April 2021.

Today the European AI Alliance represents the EC’s ambition for an open forum where the future of AI in Europe and internationally can be discussed. Following the previous two editions of the European AI Alliance Assembly, the Commission, together with the upcoming Slovenian Presidency of the Council of the European Union, is preparing a high-level event expected to turn this “ambition into action”!

Stay tuned and visit the European AI Alliance for more…